Recently the Australian Institute of Marine Science (AIMS) approached us for a photogrammetry consultancy, seeking advice on processing hardware. They were struggling to process the underwater datasets used to record the Great Barrier Reef.

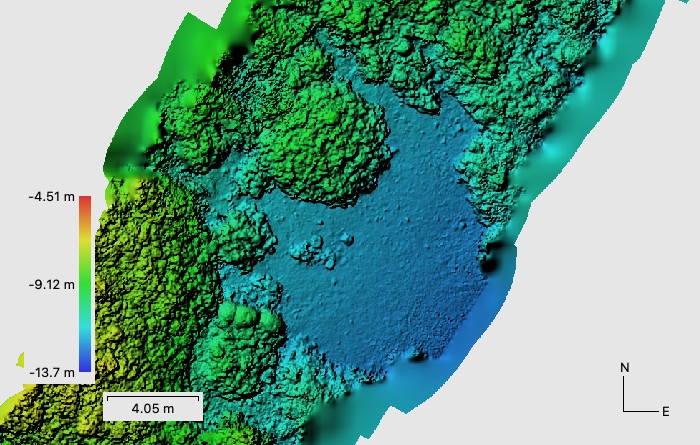

Creating a baseline record of the reef is important. Tracking changes over time helps us understand the health of an incredibly important ecosystem that is under pressure from climate change. AIMS needed to create and analyse the transects over the reef before the next set of images was gathered.

The sheer volume of images in their datasets pushed processing times beyond anything reasonable, and their current hardware and infrastructure could not cope. Could we review and recommend kit (RAM, GPUs, network and so on) that could process and deliver orthophotos faster?

More Hardware?

The first step of this photogrammetry consultancy was to review the process used to gather the images. AIMS were using a towed array comprising six GoPro cameras, with some post-processing to merge the images with GPS data. The cameras were set to shoot on an interval timer as the rig moved over the reef, capturing thousands of images in a very short time.

From our own experience we know that sinking feeling when images refuse to align thanks to “the one” missing overlap. Even so, the technique immediately rang a few alarm bells. Calculating the overlap suggested the datasets might contain a significant amount of redundant overlap.
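As a rough illustration of that overlap calculation, here is a minimal Python sketch for a single camera on an interval timer. The function names and every number in it (footprint, tow speed, interval) are hypothetical and chosen only for the example; they are not the AIMS rig's actual figures, and a real check would also need to account for the side overlap between the six cameras.

```python
def forward_overlap(footprint_m: float, speed_m_s: float, interval_s: float) -> float:
    """Fraction of each frame shared with the next frame along the tow direction.

    footprint_m: along-track length of seabed covered by one image (assumed known)
    speed_m_s:   tow speed over the reef
    interval_s:  time between shots on the interval timer
    """
    if footprint_m <= 0 or speed_m_s <= 0 or interval_s <= 0:
        raise ValueError("footprint, speed and interval must all be positive")
    spacing_m = speed_m_s * interval_s  # distance travelled between shots
    return max(0.0, 1.0 - spacing_m / footprint_m)


def frames_per_point(footprint_m: float, speed_m_s: float, interval_s: float) -> float:
    """Approximate number of consecutive frames in which one seabed point appears."""
    if footprint_m <= 0 or speed_m_s <= 0 or interval_s <= 0:
        raise ValueError("footprint, speed and interval must all be positive")
    return footprint_m / (speed_m_s * interval_s)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(forward_overlap(2.0, 0.5, 0.5))
    print(frames_per_point(2.0, 0.5, 0.5))
```

With these made-up inputs the forward overlap works out at 87.5%, and each point on the seabed appears in about eight consecutive frames from that one camera, before the other five cameras are even counted. That is the kind of figure that hints at redundancy.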

From our work with the US National Park Service we know the value of multiple cameras, but as the GoPros were not synchronised we couldn’t use the scaling advantages that stereoscopic cameras offer. Multiple cameras might bring some marginal improvements in processing, but they wouldn’t help in this case.

A New Approach

Could we reduce the volume of images and preserve output quality and alignment?

The first sample dataset in this photogrammetry consultancy arrived and comprised 3570 images. After processing, the mesh covered 114 m² of reef and the orthophoto was achieving 1.7 mm² per pixel…but the processing time for a reasonably small area was horrendous and took far more than the standard one cup of coffee.

It was time to take a step back and look at what Metashape really needs, and how we could cut the processing time by culling redundant images. Would the proposal end up spending nothing on hardware?

The step killing efficiency was alignment. In this photogrammetry consultancy, we asked: how could we speed up this step, and could we maintain the quality of the outputs? Metashape has a Reduce Overlap tool that can set the number of cameras used and disable unwanted ones, but that needs a mesh…and that needs alignment.

Taking a Step Back…

Jose took a step back at this point of the photogrammetry consultancy. After a little thinking, he came up with a new workflow to produce a low-quality mesh, upon which Reduce Overlap could work its magic. During testing we found the 3570 images in the dataset could be culled to 270 (Yes…really…) and still deliver comparable results. Mr Photogrammetry (as we call Jose) had delivered the goods again.

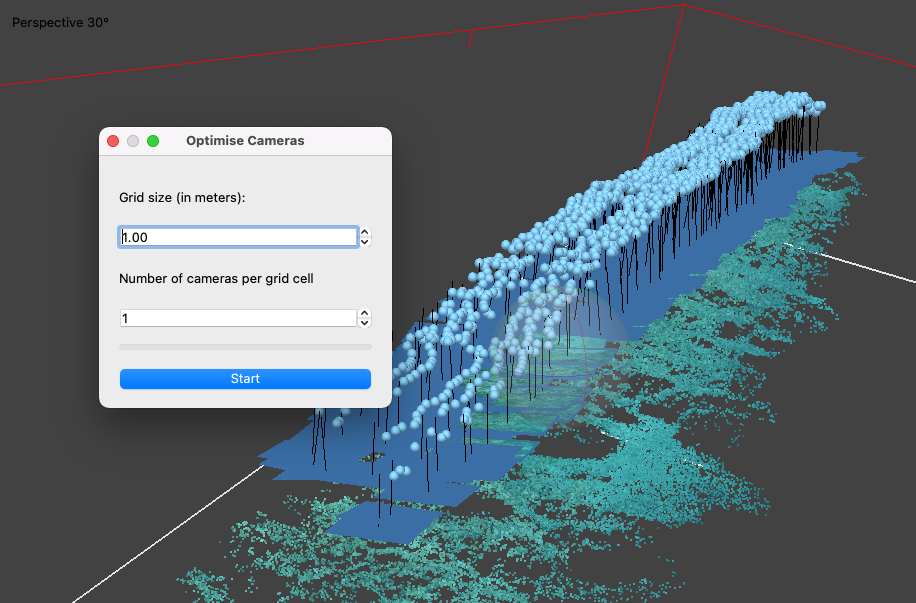

A second look at the issue saw our Python team disappear for a few hours and produce a script that diced up the images, before alignment, into grid cells based on their GPS values. The script selects the camera nearest the centre of each cell and disables the rest.

This method cut the set going into the initial alignment down to around 500 images. Running it before Jose’s new workflow improved speed, and the workflow then refined the number down to the 270 really needed.

After a few enhancements such as user input cell size, number of images per cell, error checking and warnings of missing GPS values, the script was delivered along with the new workflow.
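To make the idea concrete, here is a minimal, standalone Python sketch of that grid-based cull. It is not the delivered script: the `Camera` record, the `cull_by_grid` function and its parameters are hypothetical, positions are assumed to be already projected to metres, and leaving cameras without GPS enabled (with a warning) is our assumption for the example. In Metashape itself the positions would come from each camera's reference coordinates, and the result would be applied by toggling each camera's enabled state.

```python
import math
import warnings
from collections import defaultdict
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Camera:
    """Hypothetical stand-in for a camera record with a projected GPS position."""
    label: str
    x: Optional[float]  # easting in metres, None if GPS is missing
    y: Optional[float]  # northing in metres, None if GPS is missing
    enabled: bool = True


def cull_by_grid(cameras: List[Camera], cell_size: float = 1.0,
                 per_cell: int = 1) -> List[Camera]:
    """Keep the per_cell cameras nearest each grid cell centre, disable the rest."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    if per_cell < 1:
        raise ValueError("per_cell must be at least 1")

    cells = defaultdict(list)
    for cam in cameras:
        if cam.x is None or cam.y is None:
            # Assumption: cameras without GPS are reported and left enabled.
            warnings.warn(f"{cam.label}: missing GPS position, left enabled")
            continue
        key = (math.floor(cam.x / cell_size), math.floor(cam.y / cell_size))
        cells[key].append(cam)

    for (ix, iy), members in cells.items():
        # Centre of this grid cell in the same projected units.
        cx = (ix + 0.5) * cell_size
        cy = (iy + 0.5) * cell_size
        members.sort(key=lambda c: math.hypot(c.x - cx, c.y - cy))
        for rank, cam in enumerate(members):
            cam.enabled = rank < per_cell

    return [cam for cam in cameras if cam.enabled]


if __name__ == "__main__":
    # Made-up positions for illustration only.
    cams = [Camera("a", 0.5, 0.5), Camera("b", 0.1, 0.1),
            Camera("c", 1.6, 0.4), Camera("d", None, None)]
    kept = cull_by_grid(cams, cell_size=1.0, per_cell=1)
    print([cam.label for cam in kept])
```

A larger `cell_size` culls more aggressively, while `per_cell` trades processing time against the safety margin of extra overlap, so both are worth exposing as user inputs.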

We will leave the summary to the customer:

“The optimisation script alone was worth the investment so very happy with the outcome and the service…”

Why We Enjoy Photogrammetry Consultancy

This is the kind of work we relish. A challenge is what Jose and Simon need to keep their curious minds busy. We view every photogrammetry consultancy as an opportunity to increase the applications and possibilities in this burgeoning field.

In this photogrammetry consultancy, we have not asked about the budget allocated for new hardware. However, we remain confident the consulting services cost significantly less.

It’s a tiny contribution but every little counts. The reef could benefit in the long term if we use this approach more often.

The Workflow of a Photogrammetry Consultancy

This workflow is a game-changer for anyone gathering massive datasets where overlap is judged by eye.

No one wants to leave the scene with a missing image or two. However, processing everything can then become a waste of time and resource.

We are so pleased with this new way of working that it’s going to become a module in our Professional training course. We have a sneaking suspicion all former and current students will benefit from it, so we will also add the script as a download in the training module.

We will email students when the new module is ready.

Diving Deeper into Photogrammetry

Are you looking to dive deeper into photogrammetry and explore its applications more thoroughly? Then do please consider our Agisoft endorsed Metashape training courses.

Do you have a photogrammetry issue that would benefit from some Photogrammetry consultancy services? Please use the contact us page to get in touch – we would love to hear from you.
